Our sector publishes no shortage of evaluations, reviews and reports that traffic in ‘lessons learned’. Yet contrary to the cosy idea of having learned lessons, this new HPG report from Crawford, Holloway et al. highlights a repetition of shortcomings, such as the quintessential agency turf battle that manifests in debates over how the crisis was framed and thus responded to (in this case, whether Ebola Virus Disease (EVD) was primarily a vertical health crisis or had fused with and fuelled the longstanding conflict and multi-dimensional political and economic crisis in Nord Kivu); and the failed imperative for such extraordinary expenditure (in this case, an estimated $1 billion) to leave behind permanent infrastructure and capacity. These issues are anything but unfamiliar. They were unambiguously signposted by many reports on the 2014-16 outbreak in West Africa, including a previous HPG study (which I led), and in the evaluation reports of too many crises before that.

Certainly, we need to change ineffective and/or bad practice. Just as certainly, we need to change the impotent and/or worse practice of how we assess it. Reports are full of recommendations to agencies, donors, and governments. Perhaps it is time to shoot the messenger as well.

Why? As a starting point, because as ALNAP’s aptly titled “Missing the Point?” explains, too often evaluations and reviews focus on technical effectiveness and quantitative results while avoiding complexity and systemic issues. Or because, as IRC’s review of seven major reports on the West Africa outbreak concluded, a “persistent weakness” in the self-examination of our interventions is “the erasing of politics” that fundamentally influence the impact of our work.

Let us go further than those salient critiques. For humanitarian action to improve, we need evaluations and reviews to engage with systemic dysfunction, formulate recommendations that lead to change and equally to debate, and surface the tweak-proof complexity and values of our work.

Step 1: Time to stop sweeping our dirt under the carpet of ‘lessons learned’

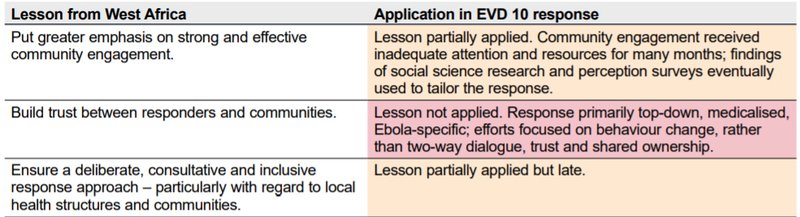

No theme better highlights this point than the persistence of counterproductive and dangerous poor practices when it comes to community engagement in an Ebola outbreak. We documented non-performance and poor performance five years ago in West Africa, clarifying that recommendations for community engagement were even then old ‘lessons’, persistently “identified but not […] learned.” HPG’s new report is exemplary in this regard, tracking these previous lessons against their application in DRC #10 (see section 6.1) without semantics: as illustrated in the table below, the crux of evaluation is not to assess whether past lessons were learned, but whether they were applied.

Step 2: Require a spade to be called a spade

TORs for evaluations, assessments, reviews, and for reports, studies or research should explicitly require authors, researchers and experts to identify potential areas of negligence, malpractice, transgression and abuse of power. Let’s be clear: at this stage of our sector’s evolution, not engaging meaningfully with a community should be identified as an abuse of power, not a programmatic deficiency.

Step 3: Recognise complexity, politics and power

Evaluations are quick to identify gaps, shortcomings and mistakes, with little contextualisation or historical understanding of why they occurred and, crucially, often zero analysis of why they continue to occur crisis after crisis. Where identified gaps and shortcomings represent symptoms, they must be accompanied by an analysis of their cause(s) and of the underlying architecture responsible for their persistence.

Step 4: Help agencies explore and understand dysfunction

Commitment to community engagement has proven easier said than done these past decades, as have similar, full-throated humanitarian commitments to localisation, ‘downward’ accountability or mainstreaming of gender programming. Their achievement requires dismantling the internal (and, where possible, external) political impediments to them, and requires abolishing ‘lessons learned’ that simply identify poor practice and then ask responders to act differently the next time. At best, such a recommendation offers a platitude. More importantly, it serves to maintain the status quo because the unstated theory of change – just do it better next time – ignores the incentives, ideologies and structures that have historically militated against engaging with communities.

Step 5: Embrace values and ethics in addition to objectivity and effectiveness

The issue of community engagement highlights a place where Crawford, Holloway et al. might have pushed further. The report is damning in its assessment of the multiple failures to engage with communities, resulting in distrust, resentment, and even violence against the Ebola response; and resulting in the devastating prolongation of the outbreak. Yet the arguments in favour of community engagement, in favour of two-way communication, in favour of anthropological expertise to help break down barriers all treat community engagement as the means to the end of more effective programming and not as an end in itself. It has substantial pragmatic importance, but community engagement chiefly reflects a value. Evaluation and review must direct attention towards the principle of humanity and the ethical imperative that people have primacy in their own lives.

Step 6: Support external accountability of humanitarian actors

Broaden the target audience of evaluations and reports (i.e., different content, not just wider dissemination) to include community organisers, journalists, and local government, so as to build multi-dimensional accountability upon the sector. Give people and communities affected by crisis the insights with which they can hold to account both humanitarian actors and the messengers of learning who often shield those actors from accountability.

In short, Crawford, Holloway et al.’s report exemplifies a critical shift in language and scope in the direction of accuracy and scrutiny; away from using evaluation and review to cloak failure, negligence, and institutional power beneath an inaccurate euphemism such as “lessons learned”. Humanitarian action needs to build on this practice, not shy away or protest against it.

Of course, the humanitarian sector can and does learn, especially those lessons of a more technocratic or programmatic nature. Constantly improving approaches for EVD treatment and the use of vaccination can be seen as further evidence of progress. But the logic here is simple: that progress is dwarfed by intransigence on the strategic and systemic fronts. Evaluation and review must expand their scope and integrity if they are to stop reinforcing the serial offences and profound inequity of the status quo.